Abstract and Introduction:

With the rise of Industry 4.0 and IoT, connectivity between edge IoT devices and the cloud is rapidly gaining importance. There are billions of devices on the market, and not all computation can be done at the edge. For edge IoT devices specifically, MQTT is a widely used communication protocol, and cloud solutions such as Greengrass can send logs to IoT Core. Sending data to IoT Core is just the start: the data can then be used in many ways, such as sending it to Athena, New Relic, and more. This post covers a very simple use case, which is sending the data to an S3 bucket. Let's start with an overview of IoT Core and Kinesis Data Firehose.

IoT Core:

AWS IoT Core lets you connect IoT devices to the AWS cloud without the need to provision or manage servers. AWS IoT Core can support billions of devices and trillions of messages, and can process and route those messages to AWS endpoints and to other devices reliably and securely. With AWS IoT Core, your applications can keep track of and communicate with all your devices, all the time, even when they aren’t connected. (Source: aws.amazon.com/iot-core/)

Kinesis Firehose:

Amazon Kinesis Data Firehose is the easiest way to reliably load streaming data into data lakes, data stores, and analytics services. It can capture, transform, and deliver streaming data to Amazon S3, Amazon Redshift, Amazon OpenSearch Service, generic HTTP endpoints, and service providers like Datadog, New Relic, MongoDB, and Splunk. It is a fully managed service that automatically scales to match the throughput of your data and requires no ongoing administration. It can also batch, compress, transform, and encrypt your data streams before loading, minimizing the amount of storage used and increasing security.

Implementation:

Step 1:

In the AWS console, navigate to AWS IoT Core. Once you are in IoT Core, expand "Act" in the left-hand menu and click on Rules.

Step 2:

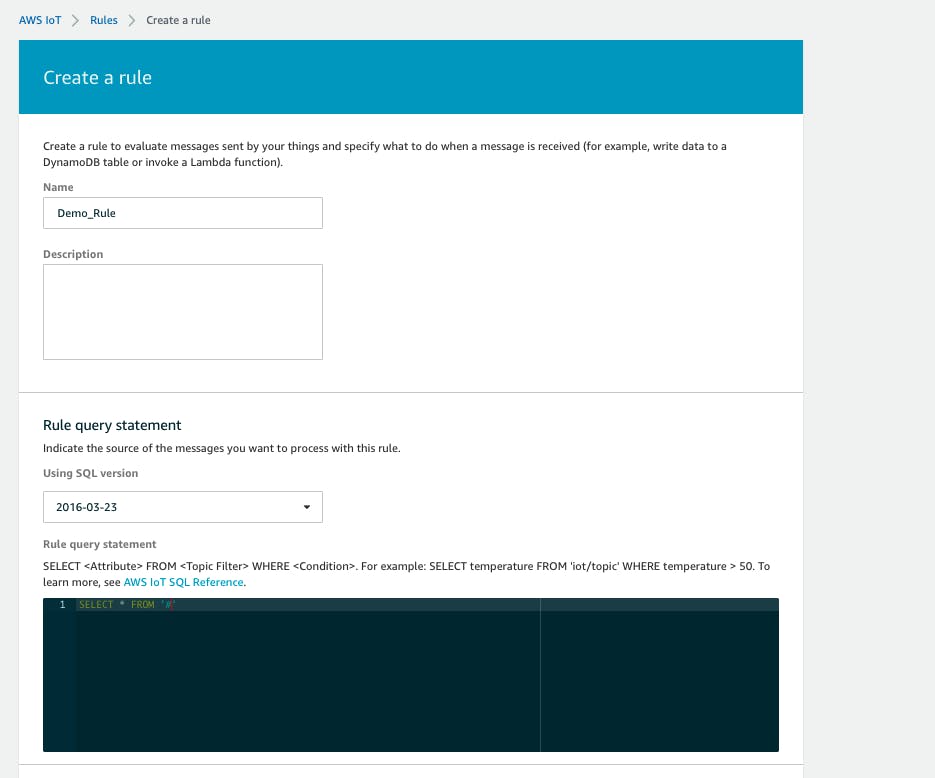

Provide a rule name and a rule query statement. In the screenshot, my rule name is Demo_Rule and the MQTT topic I have subscribed to is iot/topic. If you want to subscribe to all topics, your query should be SELECT * FROM '#'.
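To make the rule query more concrete, here is a minimal Python sketch that builds the query statement for a given topic filter and checks which topics a filter would match. The helper names `build_rule_sql` and `topic_matches` are hypothetical and only for illustration. The matcher is a simplified version of MQTT wildcard matching (`+` for one level, `#` for all remaining levels) and ignores special handling of reserved topics that start with `$`.

```python
def build_rule_sql(topic_filter: str, fields: str = "*") -> str:
    """Return an IoT rule query selecting `fields` from `topic_filter`."""
    if "'" in topic_filter:
        raise ValueError("topic filter must not contain single quotes")
    return f"SELECT {fields} FROM '{topic_filter}'"


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Simplified MQTT matching: '+' is one level, '#' is all remaining levels."""
    filter_parts = topic_filter.split("/")
    topic_parts = topic.split("/")
    for i, part in enumerate(filter_parts):
        if part == "#":
            # Multi-level wildcard: everything from here on matches.
            return True
        if i >= len(topic_parts):
            # The filter has more levels than the topic.
            return False
        if part != "+" and part != topic_parts[i]:
            return False
    # Without '#', both must have the same number of levels.
    return len(filter_parts) == len(topic_parts)


# The two queries mentioned in this step.
single_topic_sql = build_rule_sql("iot/topic")
all_topics_sql = build_rule_sql("#")
print(single_topic_sql)
print(all_topics_sql)
```

With these helpers, `topic_matches("#", "iot/topic")` is true, while `topic_matches("iot/topic", "iot/other")` is false, which is why the '#' query picks up messages from every topic.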

Step 3:

Click on Add action and select "Send a message to an Amazon Kinesis Firehose stream".

Step 4:

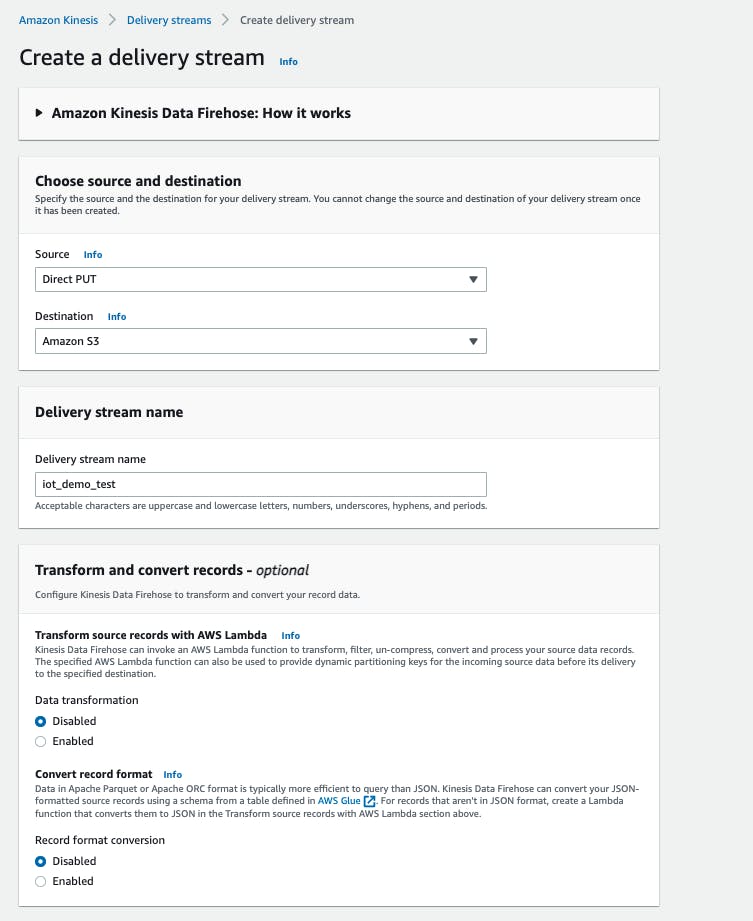

Choose the Kinesis Firehose delivery stream. You can select an existing delivery stream or create a new one; in this post I am creating a new one.

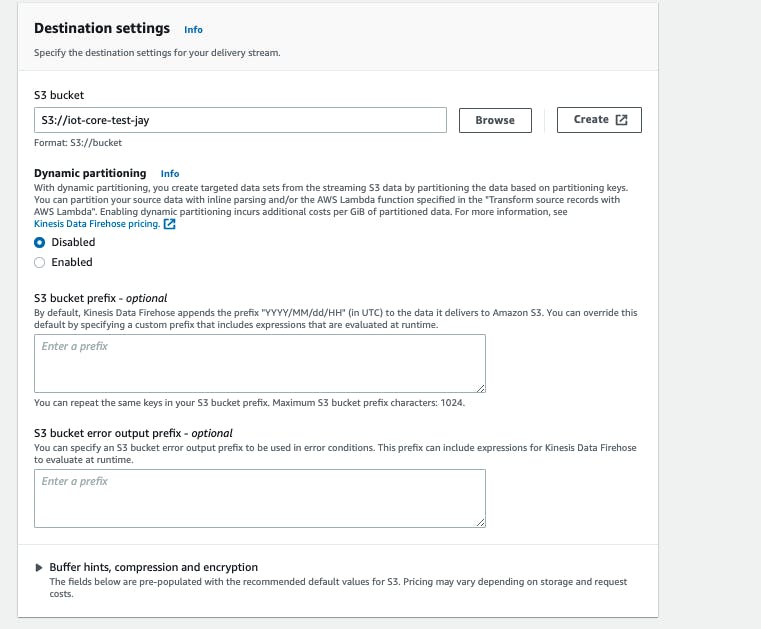

Source: Direct PUT

Destination: S3

Delivery stream name: iot_demo_test (please customize as required)

Destination settings: Provide the name of the S3 bucket and a prefix. You can also adjust the buffer size as required.

Then click on Create delivery stream.
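For readers who prefer scripting over the console, the same settings can be sketched with boto3's `create_delivery_stream` call. This is a sketch under assumptions, not the exact configuration the console generates: the bucket ARN, role ARN, and prefix below are placeholders, and the role here is one that Firehose itself assumes to write to S3 (the console can create it for you). The buffer values of 5 MB and 300 seconds are used as example defaults.

```python
from typing import Any, Dict


def build_delivery_stream_config(
    stream_name: str,
    bucket_arn: str,
    firehose_role_arn: str,
    prefix: str = "",
    buffer_size_mb: int = 5,
    buffer_interval_s: int = 300,
) -> Dict[str, Any]:
    """Build keyword arguments for Firehose's create_delivery_stream call."""
    return {
        "DeliveryStreamName": stream_name,
        # "DirectPut" corresponds to the "Direct PUT" source in the console.
        "DeliveryStreamType": "DirectPut",
        "ExtendedS3DestinationConfiguration": {
            "RoleARN": firehose_role_arn,
            "BucketARN": bucket_arn,
            "Prefix": prefix,
            # Firehose flushes to S3 when either limit is reached first.
            "BufferingHints": {
                "SizeInMBs": buffer_size_mb,
                "IntervalInSeconds": buffer_interval_s,
            },
        },
    }


def create_delivery_stream(config: Dict[str, Any]) -> str:
    """Create the stream and return its ARN (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above works without boto3

    response = boto3.client("firehose").create_delivery_stream(**config)
    return response["DeliveryStreamARN"]


# Placeholder values for illustration only; replace them with your own.
example_config = build_delivery_stream_config(
    stream_name="iot_demo_test",
    bucket_arn="arn:aws:s3:::my-example-bucket",
    firehose_role_arn="arn:aws:iam::123456789012:role/example-firehose-role",
    prefix="iot-data/",
)
```

Calling `create_delivery_stream(example_config)` would then create the stream, assuming the bucket and role already exist in your account.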

Step 5:

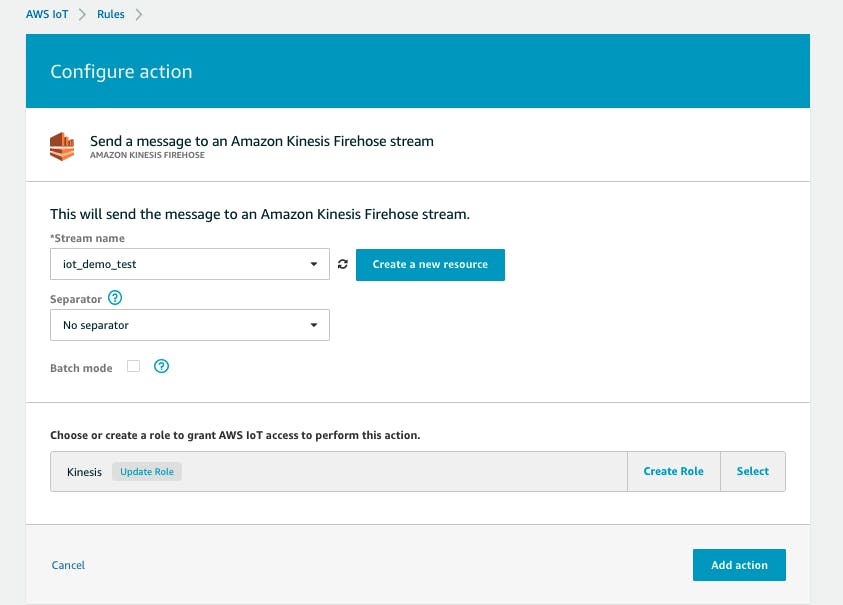

Assign a role to your IoT rule and complete the creation of the rule.
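As a rough illustration of what the role and the finished rule look like outside the console, the sketch below builds the IAM policy documents and the rule payload that boto3's `create_topic_rule` expects. The account ID, ARNs, and helper names are placeholders chosen for this example. The role needs to trust `iot.amazonaws.com` and allow `firehose:PutRecord` on the delivery stream; the console normally sets this up for you.

```python
from typing import Any, Dict


def build_trust_policy() -> Dict[str, Any]:
    """Trust policy that lets AWS IoT assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "iot.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def build_firehose_put_policy(delivery_stream_arn: str) -> Dict[str, Any]:
    """Permission policy that lets the rule write to one delivery stream."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["firehose:PutRecord"],
                "Resource": delivery_stream_arn,
            }
        ],
    }


def build_topic_rule_payload(
    sql: str, delivery_stream_name: str, role_arn: str
) -> Dict[str, Any]:
    """Rule payload with a single Firehose action."""
    return {
        "sql": sql,
        "awsIotSqlVersion": "2016-03-23",
        "ruleDisabled": False,
        "actions": [
            {
                "firehose": {
                    "roleArn": role_arn,
                    "deliveryStreamName": delivery_stream_name,
                    # Newline separator so each message lands on its own line.
                    "separator": "\n",
                }
            }
        ],
    }


def create_rule(rule_name: str, payload: Dict[str, Any]) -> None:
    """Create the rule (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builders work without boto3

    boto3.client("iot").create_topic_rule(
        ruleName=rule_name, topicRulePayload=payload
    )


# Placeholder ARN for illustration only.
example_payload = build_topic_rule_payload(
    sql="SELECT * FROM 'iot/topic'",
    delivery_stream_name="iot_demo_test",
    role_arn="arn:aws:iam::123456789012:role/example-iot-rule-role",
)
```

Calling `create_rule("Demo_Rule", example_payload)` would create a rule equivalent to the one built in the steps above, assuming the role and delivery stream already exist.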

Testing:

On the delivery stream's page in the Firehose console, under Test with demo data, choose Start sending demo data to generate sample stock ticker data. Follow the onscreen instructions to verify that data is being delivered to your S3 bucket. Note that it might take a few minutes for new objects to appear in your bucket, depending on the buffering configuration of your delivery stream. When the test is complete, choose Stop sending demo data to stop incurring usage charges.
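To test the full path from IoT Core rather than only the delivery stream, one option is to publish a message to the rule's topic and then look for new objects in the bucket. The sketch below assumes boto3 with valid credentials; the message fields (`device_id`, `temperature_c`), bucket name, and prefix are made up for illustration. Depending on your setup, the `iot-data` client may also need your account-specific endpoint URL.

```python
import json
from typing import Any, Dict, List


def build_test_message(device_id: str, temperature_c: float) -> bytes:
    """Encode a small JSON test payload (fields are illustrative only)."""
    message = {"device_id": device_id, "temperature_c": temperature_c}
    return json.dumps(message).encode("utf-8")


def extract_object_keys(list_response: Dict[str, Any]) -> List[str]:
    """Pull object keys out of an S3 list_objects_v2 response."""
    return [obj["Key"] for obj in list_response.get("Contents", [])]


def publish_test_message(topic: str, message: bytes) -> None:
    """Publish one MQTT message through the AWS IoT data plane."""
    import boto3  # imported lazily so the helpers above work without boto3

    boto3.client("iot-data").publish(topic=topic, qos=1, payload=message)


def list_delivered_keys(bucket: str, prefix: str) -> List[str]:
    """List keys currently stored under the delivery prefix."""
    import boto3

    response = boto3.client("s3").list_objects_v2(Bucket=bucket, Prefix=prefix)
    return extract_object_keys(response)


# Example payload; nothing is sent to AWS until the functions above are called.
example_message = build_test_message("sensor-1", 21.5)
print(example_message.decode("utf-8"))
```

After calling `publish_test_message("iot/topic", example_message)`, wait for the buffer interval to pass before calling `list_delivered_keys`, since Firehose only writes to S3 once a buffering limit is reached.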